Concept

Concept#

Joint PMF (Discrete Random Variables)#

Definition 59 (Joint PMF)

Let \(X\) and \(Y\) be two discrete random variables with sample spaces \(\S_X\) and \(\S_Y\) respectively.

Let \(\eventA = \lset X(\omega) = x, Y(\xi) = y \rset\) be any event in the sample space \(\S_X \times \S_Y\).

The joint probability mass function (joint PMF) of \(X\) and \(Y\) is defined as a function \(\pmfjointxy(x, y)\) that can be summed to yield a probability:

\[
\pmfjointxy(x, y) = \P \lsq X = x \text{ and } Y = y \rsq = \P \lsq (\omega, \xi) : X(\omega) = x \text{ and } Y(\xi) = y \rsq,
\]

where \(\P\) is the probability function defined over the probability space \(\pspace\).

Remark 8 (Joint PMF)

Consider any event \(\eventA \subseteq \S_X \times \S_Y\); the single-outcome event \(\lset X(\omega) = x, Y(\xi) = y \rset\) is the simplest case. The size of the event \(\eventA\) is

\[
\P \lsq \eventA \rsq = \sum_{(x, y) \in \eventA} \pmfjointxy(x, y),
\]

where the sum is over all the possible outcomes in \(\eventA\).

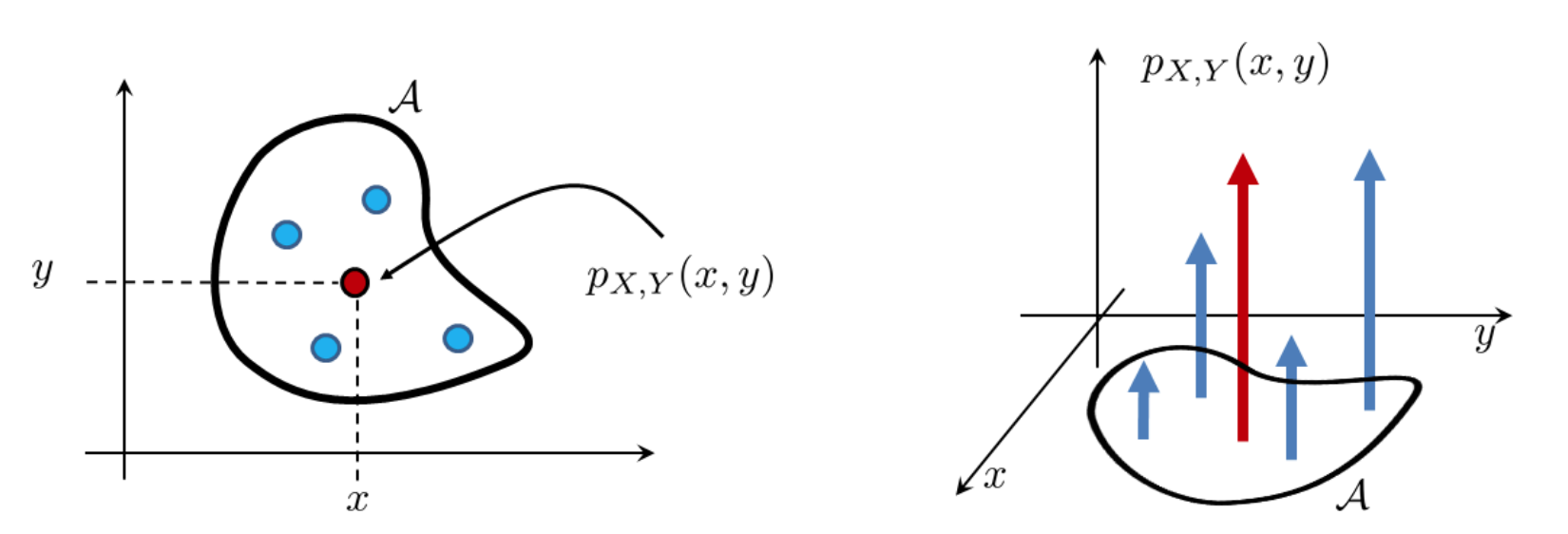

As shown in Fig. 8, the joint PMF can be pictured as a 2D array of impulses.

Fig. 8 A joint PMF for a pair of discrete random variables consists of an array of impulses. To measure the size of the event \(\eventA\), we sum all the impulses inside \(\eventA\). Image Credit: [Chan, 2021].
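The "sum the impulses inside \(\eventA\)" rule can be sketched in a few lines of Python. The joint PMF table below is hypothetical (its values are made up for illustration and do not come from [Chan, 2021]); exact fractions are used so that the sums are free of rounding error.

```python
from fractions import Fraction

# Hypothetical joint PMF of (X, Y) with X in {0, 1, 2} and Y in {0, 1}.
# joint_pmf[(x, y)] plays the role of p_{X,Y}(x, y); the values are illustrative only.
joint_pmf = {
    (0, 0): Fraction(1, 10), (0, 1): Fraction(2, 10),
    (1, 0): Fraction(3, 10), (1, 1): Fraction(1, 10),
    (2, 0): Fraction(1, 10), (2, 1): Fraction(2, 10),
}


def prob(event, pmf):
    """Sum the impulses p_{X,Y}(x, y) over every (x, y) that lies in the event."""
    return sum((p for point, p in pmf.items() if point in event), Fraction(0))


# Event A = {(x, y) : x + y >= 2}, built from the support of the PMF.
event_a = {(x, y) for (x, y) in joint_pmf if x + y >= 2}

p_a = prob(event_a, joint_pmf)            # size of the event A
total = prob(set(joint_pmf), joint_pmf)   # summing over the whole sample space

print(p_a, total)
```

Here the event contains the three points \((1, 1)\), \((2, 0)\) and \((2, 1)\), and summing over the whole support returns 1, as any valid joint PMF must.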

Joint PDF (Continuous Random Variables)#

Definition 60 (Joint PDF)

Let \(X\) and \(Y\) be two continuous random variables with sample spaces \(\S_X\) and \(\S_Y\) respectively.

Let \(\eventA \subseteq \S_X \times \S_Y\) be any event in the sample space \(\S_X \times \S_Y\).

Then, the joint PDF of \(X\) and \(Y\) is defined as a function \(\pdfjointxy(x, y)\) that can be integrated over the event \(\eventA\) to yield a probability:

\[
\P \lsq \eventA \rsq = \int_{\eventA} \pdfjointxy(x, y) \, dx \, dy.
\]

Remark 9 (Joint PDF)

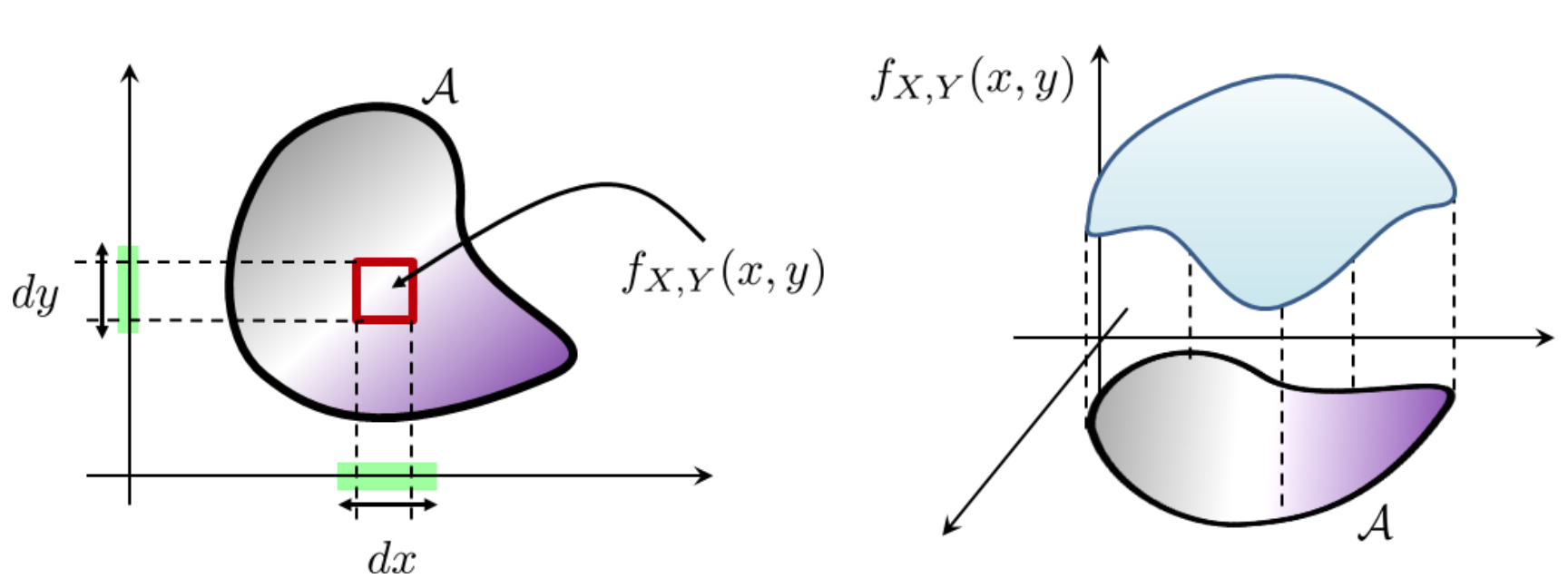

Pictorially, we can view \(f_{X, Y}\) as a 2D function where the height at a coordinate \((x, y)\) is \(f_{X, Y}(x, y)\), as can be seen from Fig. 9. To compute the probability that \((X, Y) \in \mathcal{A}\), we integrate the function \(f_{X, Y}\) over the area covered by the set \(\mathcal{A}\). For example, if the set \(\mathcal{A}\) is a rectangular box \(\mathcal{A}=[a, b] \times[c, d]\), then the integration becomes [Chan, 2021]

\[
\P \lsq a \leq X \leq b, \; c \leq Y \leq d \rsq = \int_{c}^{d} \int_{a}^{b} f_{X, Y}(x, y) \, dx \, dy.
\]

Fig. 9 A joint PDF for a pair of continuous random variables is a surface over the 2D plane. To measure the size of the event \(\eventA\), we integrate the surface function \(f_{X, Y}\) over the area covered by \(\eventA\). Image Credit: [Chan, 2021].
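As a rough numerical illustration of integrating a joint PDF over a rectangular box, the sketch below uses a midpoint Riemann sum. The joint PDF is an assumption chosen for convenience (two independent standard Gaussians), because its box probability also has a closed form through the error function that can serve as a check. The function names and the box limits are hypothetical.

```python
import math


def joint_pdf(x, y):
    """Assumed joint PDF: two independent standard Gaussians (illustration only)."""
    return math.exp(-(x * x + y * y) / 2.0) / (2.0 * math.pi)


def rect_prob(pdf, a, b, c, d, n=400):
    """Midpoint-rule approximation of the double integral of pdf over [a, b] x [c, d]."""
    hx, hy = (b - a) / n, (d - c) / n
    total = 0.0
    for i in range(n):
        x = a + (i + 0.5) * hx
        for j in range(n):
            y = c + (j + 0.5) * hy
            total += pdf(x, y)
    return total * hx * hy


def std_normal_cdf(t):
    """CDF of a standard Gaussian, written with the error function."""
    return 0.5 * (1.0 + math.erf(t / math.sqrt(2.0)))


a, b, c, d = -1.0, 1.0, 0.0, 2.0
approx = rect_prob(joint_pdf, a, b, c, d)

# Closed form, valid only for this particular (independent) choice of joint PDF.
exact = (std_normal_cdf(b) - std_normal_cdf(a)) * (std_normal_cdf(d) - std_normal_cdf(c))

print(approx, exact)
```

The two numbers should agree to several decimal places. For a joint PDF without a closed-form answer, only the numerical route is available.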

Normalization#

Theorem 19 (Joint PMF and Joint PDF)

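Let \(\S = \S_X \times \S_Y\). All joint PMFs and joint PDFs satisfy

\[
\sum_{(x, y) \in \S} \pmfjointxy(x, y) = 1 \quad \text{or} \quad \int_{\S} \pdfjointxy(x, y) \, dx \, dy = 1.
\]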

Marginal PMF and PDF#

To recover the PMF or PDF of a single random variable, we can marginalize the joint PMF or PDF by summing or integrating over the other random variable. More concretely, we define the marginal PMF and PDF below.

Definition 61 (Marginal PMF and PDF)

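The marginal PMF is defined as

\[
p_X(x) = \sum_{y \in \S_Y} \pmfjointxy(x, y) \quad \text{and} \quad p_Y(y) = \sum_{x \in \S_X} \pmfjointxy(x, y),
\]

and the marginal PDF is defined as

\[
f_X(x) = \int_{\S_Y} \pdfjointxy(x, y) \, dy \quad \text{and} \quad f_Y(y) = \int_{\S_X} \pdfjointxy(x, y) \, dx.
\]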

Remark 10 (Marginal Distribution and the Law of Total Probability)

From Theorem 3, one can see that the definition of marginalization is closely related to the law of total probability. In fact, marginalization is a sum of conditioned observations.
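To make the link explicit in the discrete case, the marginal PMF can be written as a weighted sum of conditional PMFs. The conditional notation \(p_{X \mid Y}(x \mid y)\) is an assumption here, since it is only introduced in the chapter on Conditional PMF and PDF, and the sum runs over those \(y\) with \(p_Y(y) > 0\):

\[
p_X(x) = \sum_{y \in \S_Y} p_{X, Y}(x, y) = \sum_{y \in \S_Y} p_{X \mid Y}(x \mid y) \, p_Y(y).
\]

Each term is the probability of \(X = x\) given one particular value of \(Y\), weighted by how likely that value of \(Y\) is.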

In the chapter on Naive Bayes, we see that the denominator \(\mathbb{P}(\mathbf{X})\) can be derived by marginalization.

See the examples section in the chapter on Conditional PMF and PDF for a concrete example.
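A small discrete table also illustrates marginalization directly. The sketch below assumes a hypothetical joint PMF (the numbers are made up) and recovers both marginal PMFs by summing over the other variable.

```python
from collections import defaultdict
from fractions import Fraction

# Hypothetical joint PMF of (X, Y); the values are illustrative only.
joint_pmf = {
    (0, 0): Fraction(1, 10), (0, 1): Fraction(2, 10),
    (1, 0): Fraction(3, 10), (1, 1): Fraction(1, 10),
    (2, 0): Fraction(1, 10), (2, 1): Fraction(2, 10),
}


def marginals(pmf):
    """Return (p_X, p_Y) obtained by summing the joint PMF over the other variable."""
    p_x, p_y = defaultdict(Fraction), defaultdict(Fraction)
    for (x, y), p in pmf.items():
        p_x[x] += p  # accumulate over y for this fixed x
        p_y[y] += p  # accumulate over x for this fixed y
    return dict(p_x), dict(p_y)


p_x, p_y = marginals(joint_pmf)
print(p_x)
print(p_y)
```

Each marginal sums to 1 on its own, which follows from the normalization of the joint PMF.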

Example 12 (Marginal PDF of Bivariate Normal Distribution)

This example is adapted from [Chan, 2021].

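A joint Gaussian random variable \((X, Y)\) has a joint PDF given by

\[
f_{X, Y}(x, y) = \frac{1}{2 \pi \sigma^2} \exp \left\{ -\frac{(x - \mu_X)^2 + (y - \mu_Y)^2}{2 \sigma^2} \right\}.
\]

The marginal PDFs \(f_X(x)\) and \(f_Y(y)\) are obtained by integrating out the other variable. For \(f_X(x)\),

\[
\begin{aligned}
f_X(x) &= \int_{-\infty}^{\infty} f_{X, Y}(x, y) \, dy \\
&= \int_{-\infty}^{\infty} \frac{1}{2 \pi \sigma^2} \exp \left\{ -\frac{(x - \mu_X)^2 + (y - \mu_Y)^2}{2 \sigma^2} \right\} dy \\
&= \frac{1}{\sqrt{2 \pi \sigma^2}} \exp \left\{ -\frac{(x - \mu_X)^2}{2 \sigma^2} \right\} \cdot \int_{-\infty}^{\infty} \frac{1}{\sqrt{2 \pi \sigma^2}} \exp \left\{ -\frac{(y - \mu_Y)^2}{2 \sigma^2} \right\} dy.
\end{aligned}
\]

Recognizing that the last integral is equal to unity because it integrates a Gaussian PDF over the real line, it follows that

\[
f_X(x) = \frac{1}{\sqrt{2 \pi \sigma^2}} \exp \left\{ -\frac{(x - \mu_X)^2}{2 \sigma^2} \right\}.
\]

Similarly, we have

\[
f_Y(y) = \frac{1}{\sqrt{2 \pi \sigma^2}} \exp \left\{ -\frac{(y - \mu_Y)^2}{2 \sigma^2} \right\}.
\]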
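The marginalization can also be checked numerically. The sketch below assumes the joint PDF is the product of two Gaussians with a common variance \(\sigma^2\) and means \(\mu_X\), \(\mu_Y\). The parameter values, the evaluation point and the integration range are all hypothetical choices made for illustration.

```python
import math

# Hypothetical parameters of the assumed joint Gaussian.
MU_X, MU_Y, SIGMA = 1.0, -2.0, 1.5


def joint_pdf(x, y):
    """Assumed joint PDF: exp(-((x-mu_X)^2 + (y-mu_Y)^2) / (2 sigma^2)) / (2 pi sigma^2)."""
    q = ((x - MU_X) ** 2 + (y - MU_Y) ** 2) / (2.0 * SIGMA ** 2)
    return math.exp(-q) / (2.0 * math.pi * SIGMA ** 2)


def gaussian_pdf(t, mu, sigma):
    """One-dimensional Gaussian PDF with mean mu and standard deviation sigma."""
    return math.exp(-((t - mu) ** 2) / (2.0 * sigma ** 2)) / math.sqrt(2.0 * math.pi * sigma ** 2)


def marginal_x(x, half_width=10.0, n=4000):
    """Midpoint-rule integral of joint_pdf(x, y) over y within half_width sigmas of MU_Y."""
    lo = MU_Y - half_width * SIGMA
    h = 2.0 * half_width * SIGMA / n
    return h * sum(joint_pdf(x, lo + (k + 0.5) * h) for k in range(n))


x0 = 0.3
numeric = marginal_x(x0)                     # integrate y out numerically
closed_form = gaussian_pdf(x0, MU_X, SIGMA)  # the marginal derived by hand

print(numeric, closed_form)
```

Truncating the integral to ten standard deviations discards only a negligible tail, so the two values should match closely.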

Independence#

In the case of bivariate random variables, independence means that the joint PMF or PDF can be factorized into the product of the PMF or PDF of the individual random variables. This is nothing but the definition of independence mentioned in chapter 2 (Definition 10).

More concretely, we define independence below.

Definition 62 (Independent random variables)

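Random variables \(X\) and \(Y\) are independent if and only if

\[
p_{X, Y}(x, y) = p_X(x) \, p_Y(y) \quad \text{or} \quad f_{X, Y}(x, y) = f_X(x) \, f_Y(y)
\]

for all \(x\) and \(y\).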

Definition 63 (Independence for N random variables)

A sequence of random variables \(X_1, \ldots, X_N\) is independent if and only if their joint PDF (or joint PMF) can be factorized:

\[
f_{X_1, \ldots, X_N}(x_1, \ldots, x_N) = \prod_{n=1}^{N} f_{X_n}(x_n).
\]
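For two discrete random variables, the factorization condition can be tested directly on a joint PMF table. The following is a minimal sketch with two hypothetical tables, one built as a product of marginals and one in which \(Y\) always equals \(X\). The helper names are assumptions for illustration.

```python
from collections import defaultdict
from fractions import Fraction
from itertools import product


def marginals(pmf):
    """Return (p_X, p_Y) by summing the joint PMF over the other variable."""
    p_x, p_y = defaultdict(Fraction), defaultdict(Fraction)
    for (x, y), p in pmf.items():
        p_x[x] += p
        p_y[y] += p
    return dict(p_x), dict(p_y)


def is_independent(pmf):
    """Check p_{X,Y}(x, y) == p_X(x) * p_Y(y) for every pair (x, y) of marginal support points."""
    p_x, p_y = marginals(pmf)
    return all(
        pmf.get((x, y), Fraction(0)) == p_x[x] * p_y[y]
        for x, y in product(p_x, p_y)
    )


# Hypothetical independent pair: X uniform on {0, 1}, Y uniform on {0, 1, 2}.
independent_pmf = {
    (x, y): Fraction(1, 2) * Fraction(1, 3) for x in (0, 1) for y in (0, 1, 2)
}

# Hypothetical dependent pair: Y always equals X.
dependent_pmf = {(0, 0): Fraction(1, 2), (1, 1): Fraction(1, 2)}

print(is_independent(independent_pmf), is_independent(dependent_pmf))
```

Pairs missing from the table are treated as having probability zero, which is why the second table fails: \(p_{X, Y}(0, 1) = 0\) while \(p_X(0) \, p_Y(1) = 1/4\).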

Independent and Identically Distributed (i.i.d.)#

Definition 64 (Independent and Identically Distributed (i.i.d.))

Let \(X_1, X_2, \ldots, X_n\) be a sequence of random variables.

We say that the random variables are independent and identically distributed (i.i.d.) if the following two conditions hold:

The random variables are independent of each other. That is, \(\P \lsq X_i = x_i \mid X_j = x_j, j \neq i \rsq = \P \lsq X_i = x_i \rsq\) for all \(i\) and all values \(x_1, \ldots, x_n\).

The random variables have the same distribution. That is, \(\P \lsq X_1 = x \rsq = \P \lsq X_2 = x \rsq = \ldots = \P \lsq X_n = x \rsq\) for all \(x\).

Corollary 10 (Joint PDF of \(\iid\) random variables)

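An immediate consequence of the definition of \(\iid\) is that the joint PDF of \(\iid\) random variables can be written as a product of PDFs. Since all the variables share the same PDF \(f_{X_1}\),

\[
f_{X_1, \ldots, X_n}(x_1, \ldots, x_n) = \prod_{i=1}^{n} f_{X_1}(x_i).
\]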

Remark 11 (Why is \(\iid\) so important?)

If a set of random variables is \(\iid\), then the joint PDF can be written as a product of PDFs.

Integrating a joint PDF is difficult. Integrating a product of PDFs is much easier.

The two examples below are taken from [Chan, 2021].

Example 13 (Gaussian \(\iid\))

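Let \(X_1, X_2, \ldots, X_N\) be a sequence of \(\iid\) Gaussian random variables where each \(X_i\) has a PDF

\[
f_{X_i}(x) = \frac{1}{\sqrt{2 \pi}} \exp \left\{ -\frac{x^2}{2} \right\}.
\]

The joint PDF of \(X_1, X_2, \ldots, X_N\) is

\[
f_{X_1, \ldots, X_N}(x_1, \ldots, x_N) = \prod_{i=1}^{N} \left\{ \frac{1}{\sqrt{2 \pi}} \exp \left\{ -\frac{x_i^2}{2} \right\} \right\} = \left( \frac{1}{\sqrt{2 \pi}} \right)^{N} \exp \left\{ -\frac{1}{2} \sum_{i=1}^{N} x_i^2 \right\},
\]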

which is a function depending not on the individual values of \(x_1, x_2, \ldots, x_N\), but on the sum \(\sum_{i=1}^N x_i^2\). So we have “compressed” an N-dimensional function into a 1D function.
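The "compression" can be seen numerically. The sketch below assumes each \(X_i\) is a standard Gaussian (zero mean, unit variance) and compares the product of \(N\) one-dimensional PDFs with a single formula that uses only \(\sum_{i} x_i^2\). The sample points are arbitrary.

```python
import math


def std_normal_pdf(x):
    """PDF of a standard Gaussian (assumed zero mean and unit variance)."""
    return math.exp(-x * x / 2.0) / math.sqrt(2.0 * math.pi)


def joint_pdf_product(xs):
    """Joint PDF of i.i.d. samples as a product of N one-dimensional PDFs."""
    return math.prod(std_normal_pdf(x) for x in xs)


def joint_pdf_compressed(xs):
    """The same joint PDF, computed from N and the sum of squares only."""
    n = len(xs)
    sum_sq = sum(x * x for x in xs)
    return (2.0 * math.pi) ** (-n / 2.0) * math.exp(-sum_sq / 2.0)


xs = [0.5, -1.2, 2.0, 0.1]
point_a = [1.0, 0.0]                                # sum of squares = 1
point_b = [math.sqrt(0.5), math.sqrt(0.5)]          # a different point, same sum of squares

print(joint_pdf_product(xs), joint_pdf_compressed(xs))
print(joint_pdf_product(point_a), joint_pdf_product(point_b))
```

Two different points with the same sum of squares receive the same joint density, which is what "depending only on the sum" means in practice.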

Example 14 (Gaussian \(\iid\) (cont.))

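Let \(\theta\) be a deterministic number that was sent through a noisy channel. We model the noise as an additive Gaussian random variable with mean 0 and variance \(\sigma^2\). Suppose we have observed measurements \(X_i = \theta + W_i\) for \(i = 1, \ldots, N\), where the \(W_i \sim \gaussian(0, \sigma^2)\) are \(\iid\). Then the PDF of each \(X_i\) is

\[
f_{X_i}(x) = \frac{1}{\sqrt{2 \pi \sigma^2}} \exp \left\{ -\frac{(x - \theta)^2}{2 \sigma^2} \right\}.
\]

Thus the joint PDF of \((X_1, X_2, \ldots, X_N)\) is

\[
f_{X_1, \ldots, X_N}(x_1, \ldots, x_N) = \prod_{i=1}^{N} \left\{ \frac{1}{\sqrt{2 \pi \sigma^2}} \exp \left\{ -\frac{(x_i - \theta)^2}{2 \sigma^2} \right\} \right\} = \left( \frac{1}{\sqrt{2 \pi \sigma^2}} \right)^{N} \exp \left\{ -\frac{1}{2 \sigma^2} \sum_{i=1}^{N} (x_i - \theta)^2 \right\}.
\]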

Essentially, this joint PDF tells us the probability density of seeing sample data \(x_1, \ldots, x_N\).
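To see how the joint density of the observed data changes with \(\theta\), the sketch below evaluates it in the log domain, which avoids underflow when \(N\) is large. It assumes the measurements are \(\iid\) Gaussian with mean \(\theta\) and variance \(\sigma^2\). The observations, \(\sigma\) and the two candidate values of \(\theta\) are hypothetical.

```python
import math


def gaussian_pdf(x, mu, sigma):
    """One-dimensional Gaussian PDF with mean mu and standard deviation sigma."""
    return math.exp(-((x - mu) ** 2) / (2.0 * sigma ** 2)) / math.sqrt(2.0 * math.pi * sigma ** 2)


def joint_pdf_product(xs, theta, sigma):
    """Joint PDF of the measurements as a product of per-sample PDFs."""
    return math.prod(gaussian_pdf(x, theta, sigma) for x in xs)


def log_joint_pdf(xs, theta, sigma):
    """Logarithm of the joint PDF, using the closed form with the sum of squared errors."""
    n = len(xs)
    sum_sq = sum((x - theta) ** 2 for x in xs)
    return -0.5 * n * math.log(2.0 * math.pi * sigma ** 2) - sum_sq / (2.0 * sigma ** 2)


xs = [2.1, 1.9, 2.4, 1.6]   # hypothetical noisy measurements
sigma = 0.5                 # hypothetical noise standard deviation

log_density_near = log_joint_pdf(xs, 2.0, sigma)   # theta close to the data
log_density_far = log_joint_pdf(xs, 3.0, sigma)    # theta far from the data

print(log_density_near, log_density_far)
```

A value of \(\theta\) close to the measurements gives a much larger joint density than one far away, because the sum of squared deviations in the exponent is smaller.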

Joint CDF#

We now introduce the cumulative distribution function (CDF) for bivariate random variables. As in the one-dimensional case, the joint CDF is a function that gives the probability that \(X\) and \(Y\) are simultaneously less than or equal to some values \(x\) and \(y\), respectively.

The joint CDF is defined as follows.

Definition 65 (Joint CDF)

Let \(X\) and \(Y\) be two random variables. The joint CDF of \(X\) and \(Y\) is the function \(F_{X, Y}(x, y)\) such that

\[
F_{X, Y}(x, y) = \P \lsq X \leq x \cap Y \leq y \rsq.
\]

Definition 66 (Joint CDF (cont.))

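If \(X\) and \(Y\) are discrete, then

\[
F_{X, Y}(x, y) = \sum_{y' \leq y} \sum_{x' \leq x} p_{X, Y}(x', y').
\]

If \(X\) and \(Y\) are continuous, then

\[
F_{X, Y}(x, y) = \int_{-\infty}^{y} \int_{-\infty}^{x} f_{X, Y}(x', y') \, dx' \, dy'.
\]

If the two random variables are independent, then we have

\[
F_{X, Y}(x, y) = F_X(x) \, F_Y(y).
\]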
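For discrete random variables, the joint CDF is a cumulative sum of the joint PMF. The sketch below assumes two independent fair dice as a hypothetical example and checks that the joint CDF factorizes into the two marginal CDFs. A marginal CDF is obtained by letting the other argument go to infinity.

```python
from fractions import Fraction


def joint_cdf(pmf, x, y):
    """F_{X,Y}(x, y): sum of p_{X,Y}(x', y') over all x' <= x and y' <= y."""
    return sum(
        (p for (xi, yi), p in pmf.items() if xi <= x and yi <= y),
        Fraction(0),
    )


# Hypothetical example: two independent fair dice, so every pair has probability 1/36.
dice_pmf = {(i, j): Fraction(1, 36) for i in range(1, 7) for j in range(1, 7)}

INF = float("inf")

f_xy = joint_cdf(dice_pmf, 2, 3)    # P[X <= 2 and Y <= 3]
f_x = joint_cdf(dice_pmf, 2, INF)   # marginal CDF F_X(2) = F_{X,Y}(2, infinity)
f_y = joint_cdf(dice_pmf, INF, 3)   # marginal CDF F_Y(3) = F_{X,Y}(infinity, 3)

print(f_xy, f_x * f_y)
```

Because the two dice are independent, both printed values coincide. For a dependent pair the product of the marginal CDFs would generally differ from the joint CDF.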